What is Boosting?

Boosting in machine learning is an ensemble technique related to bagging. It often achieves higher accuracy than bagging, but it can overfit if we do not know when to stop adding models.

Types of Boosting

- Ada Boost (Adaptive Boosting)

- Gradient Boosting

- XGBoost (Extreme Gradient Boosting)

Ada Boost (Adaptive Boosting)

Bagging builds its models in parallel, while adaptive boosting is a sequential procedure, which means that each new model depends on the one before it.

The main distinction between boosting and bagging is that in bagging we create m bags, each with randomly selected observations, whereas in boosting the observations drawn for a bag are affected by the model’s performance on the previous bag. Unlike bagging, boosting uses weak learners (i.e., models such as very shallow decision trees). The results of these learners are combined with a weighted average (for regression problems) or weighted majority voting (for classification problems), unlike bagging, which uses simple democratic voting. Models that performed well during training are rewarded with higher weights than models with a higher error rate.
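The difference between democratic voting and weighted majority voting can be sketched in a few lines of Python. The labels and model weights below are made up for illustration; in Ada Boost the weights would come from each model's training error.

```python
from collections import defaultdict

def weighted_vote(predictions, weights):
    """Boosting-style vote: each model's label counts with that model's weight."""
    totals = defaultdict(float)
    for label, weight in zip(predictions, weights):
        totals[label] += weight
    return max(totals, key=totals.get)

def majority_vote(predictions):
    """Bagging-style vote: every model counts equally."""
    return weighted_vote(predictions, [1.0] * len(predictions))

# Three hypothetical models predict a class for one observation.
predictions = ["spam", "ham", "ham"]
# Hypothetical model weights: the first model had the lowest training error.
weights = [0.9, 0.3, 0.4]

simple = majority_vote(predictions)             # two votes against one
weighted = weighted_vote(predictions, weights)  # 0.9 outweighs 0.3 + 0.4
```

With equal votes the two weaker models win, while the weighted vote follows the single model that performed best during training.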

Process of Ada Boost

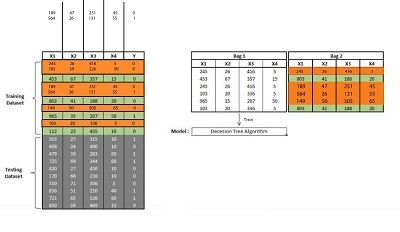

Let’s say we have 20 instances of a classification problem, of which 10 are used as the training data set to build an Ada Boost model. The initial bag is constructed from this set of 10 observations by randomly picking six observations (drawn with replacement). This bag is then used to train a decision tree model. But, unlike bagging, the trained model is then applied to all x variables and all 10 observations of the training dataset. Suppose we discover that, on the 10 observations, the Y variable was wrongly predicted for 6 of them. We then create another bag; this time, however, we give additional weight to the incorrectly classified observations so that the next model focuses more on them. As a result, the probability of these observations being drawn for the next bag increases. This process is repeated until the set number of bags has been created.

In a 2-bag solution, for example, the 6 records that the first model classified incorrectly are more likely to be drawn into the second bag than the 4 it classified correctly. In the beginning, the weights are the same for all observations (1/N). However, as the process moves from model to model, the weights of wrongly predicted observations are increased, and the weights of accurately predicted observations are reduced. Each weak learner is therefore required to pay particular attention to the observations that the previous model did not see or did not predict correctly.
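The reweighting step can be illustrated with the standard discrete Ada Boost update for labels in {-1, +1}. This is a minimal sketch, not the full algorithm. It assumes 3 of the 10 observations are misclassified rather than the 6 in the walkthrough above, because the standard formula expects a weak learner with a weighted error below 0.5. The function name `adaboost_reweight` is hypothetical.

```python
import math

def adaboost_reweight(weights, y_true, y_pred):
    """One Ada Boost round for labels in {-1, +1}: return (alpha, new_weights).

    Assumes the weighted error is strictly between 0 and 1.
    """
    # Weighted error of the current weak learner.
    error = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)
    # Weight of this learner in the final vote; larger when the error is smaller.
    alpha = 0.5 * math.log((1.0 - error) / error)
    # t * p is -1 for a misclassified point (weight grows) and +1 otherwise (weight shrinks).
    updated = [w * math.exp(-alpha * t * p) for w, t, p in zip(weights, y_true, y_pred)]
    # Normalize so the weights again sum to 1.
    total = sum(updated)
    return alpha, [w / total for w in updated]

n = 10
initial_weights = [1.0 / n] * n                    # every observation starts at 1/N
y_true = [1, 1, 1, 1, 1, -1, -1, -1, -1, -1]
y_pred = [-1, -1, -1, 1, 1, -1, -1, -1, -1, -1]    # first three are wrong (assumed)

alpha, new_weights = adaboost_reweight(initial_weights, y_true, y_pred)
```

After this round each misclassified observation carries weight 1/6 and each correctly classified one 1/14, so the next bag is much more likely to contain the hard cases.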

Gradient Boost

In this method, we increase the complexity of the model with each iteration. At each stage, the error of the current model is computed through the gradient of the loss function. In the next stage, a new model addresses the weaknesses of the earlier weak model. These weaknesses are the errors, i.e., the residuals or gradients of the loss function. Note that, unlike adaptive boosting, the mistakes are not handled by weighting observations and using those weights to create new bags. Instead, the training data is used to find the data points that were poorly predicted (i.e., those with large residuals) by the initial weak model, and a more complex model is then built to correct those mistakes.

Here, training and evaluation are not done through bootstrapping (as in bagging/random forests, where all the data can be used and the out-of-bag error serves to test the model). Instead, evaluation is done via k-fold cross-validation, in which each fold in turn serves as the test set and the mean over the folds is used to measure the performance of the model. Gradient boosting fits residuals for regression as well as classification problems, but conceptually, regression with gradient boosting is easier to understand.

For instance, suppose we face a regression problem and use gradient-boosted trees on a set of data. We create a single-level decision tree (a decision stump) and obtain its predictions. It is a basic model, and in a plot of its predictions we can observe a number of errors (particularly in the upper-left portion of the graph). The gradient boosting algorithm calculates the gradients of the loss function. Based on these residual errors, a new model is built (here, again a single-level decision tree) to predict the residuals. This lets us better predict the data points that the prior model got wrong (the upper-left portion of the graph). We can then combine these two predictors into a new model, which is more complex than the first model but has a lower overall error.

Therefore, we employ many weak learners and boost them into a stronger learner, where each new weak learner is trained on the mistakes of the model built so far. Following the idea of gradient descent optimization, we combine those weak models into a strong and complex model. This process can be continued by computing the residuals of the combined model and fitting another weak model that tries to reduce the largest remaining residuals. By merging this new weak model with the previous two, we obtain a stronger yet more complex model with smaller residuals than the model before it.
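The residual-fitting loop described above can be sketched for regression with squared loss, where the negative gradient is simply the residual. This is an illustrative pure-Python sketch with one feature and single-split trees. The data, the learning rate of 0.5, and all function names are assumptions chosen for the example.

```python
def fit_stump(x, residuals):
    """Return (threshold, left_mean, right_mean) of the best single-split tree."""
    best = None
    values = sorted(set(x))
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2.0
        left = [r for xi, r in zip(x, residuals) if xi <= threshold]
        right = [r for xi, r in zip(x, residuals) if xi > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]

def predict_stump(stump, xi):
    threshold, left_mean, right_mean = stump
    return left_mean if xi <= threshold else right_mean

def gradient_boost(x, y, n_rounds, learning_rate=0.5):
    """Fit stumps sequentially, each one on the residuals of the model so far."""
    base = sum(y) / len(y)                 # initial model: predict the mean
    preds = [base] * len(y)
    stumps = []
    for _ in range(n_rounds):
        residuals = [yi - pi for yi, pi in zip(y, preds)]   # negative gradient of squared loss
        stump = fit_stump(x, residuals)
        stumps.append(stump)
        preds = [pi + learning_rate * predict_stump(stump, xi)
                 for xi, pi in zip(x, preds)]
    return base, learning_rate, stumps

def predict(model, xi):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, xi) for s in stumps)

def mse(model, x, y):
    return sum((yi - predict(model, xi)) ** 2 for xi, yi in zip(x, y)) / len(y)

# Made-up one-dimensional regression data.
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [1.0, 1.2, 0.9, 3.1, 3.0, 5.2, 5.1, 4.9]

mse_base = mse(gradient_boost(x, y, n_rounds=0), x, y)
mse_one = mse(gradient_boost(x, y, n_rounds=1), x, y)
mse_twenty = mse(gradient_boost(x, y, n_rounds=20), x, y)
```

On the training data the error falls as stumps are added, which is also why the number of rounds has to be chosen with held-out data: the training error alone will keep rewarding a more complex model.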

The process of Gradient Boosting

One of the drawbacks of gradient boosting is its scalability: because the boosting process is sequential, the individual models cannot be trained in parallel.

Extreme Gradient Boost

Extreme Gradient Boosting (XGBoost) is an enhanced implementation of gradient boosting. It supports three forms of gradient boosting: standard gradient boosting (discussed earlier), stochastic gradient boosting (sub-sampling is performed at the row and column levels), and regularized gradient boosting (with L1 and L2 regularization). It is a powerful machine learning library and is so effective that, of 29 winning solutions to machine learning challenges published on the blog of Kaggle (a machine learning competition site) in 2015, 17 used XGBoost. Eight of those used XGBoost alone, while most of the others combined it with neural networks in ensembles.

The advantage of XGBoost over traditional gradient boosting is that it parallelizes the construction of each tree across all CPU cores during training, which saves time even though the boosting rounds themselves remain sequential. It also helps control overfitting because it applies both L1 and L2 regularization, and it handles missing values and sparse data efficiently (sparsity awareness). XGBoost also provides built-in cross-validation that evaluates the model at every boosting iteration, generally through the k-fold technique (e.g., k = 10), in which each of the ten folds serves as the test set in turn and the average across folds is used to evaluate the result. It is crucial to remember that XGBoost has many hyperparameters that need to be tuned, especially the number of base models to build, since beyond a certain number of rounds it can produce an overfitted model that is overly complex.
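To see how L1 and L2 regularization restrain a boosted tree, consider the closed-form value assigned to a single leaf. The sketch below follows the regularized leaf weight commonly given for XGBoost, where G and H are the sums of first and second derivatives of the loss over the observations in the leaf. It is an illustration under that assumption, not a copy of the library's code. The names `reg_lambda` and `reg_alpha` mirror the L2 and L1 parameter names in the XGBoost Python package, and the residual values are made up.

```python
def soft_threshold(value, alpha):
    """Shrink value toward zero by alpha (the L1 effect); exactly zero if |value| <= alpha."""
    if value > alpha:
        return value - alpha
    if value < -alpha:
        return value + alpha
    return 0.0

def leaf_weight(grad_sum, hess_sum, reg_lambda=1.0, reg_alpha=0.0):
    """Regularized leaf value: -soft_threshold(G, alpha) / (H + lambda)."""
    return -soft_threshold(grad_sum, reg_alpha) / (hess_sum + reg_lambda)

# Squared loss with a current prediction of 0: gradient = prediction - target, hessian = 1.
residuals = [2.0, 3.0, 4.0]                  # hypothetical targets falling in one leaf
grad_sum = sum(0.0 - r for r in residuals)   # G = -9.0
hess_sum = float(len(residuals))             # H = 3.0

plain = leaf_weight(grad_sum, hess_sum, reg_lambda=0.0, reg_alpha=0.0)    # mean residual
with_l2 = leaf_weight(grad_sum, hess_sum, reg_lambda=1.0, reg_alpha=0.0)  # shrunk by lambda
with_both = leaf_weight(grad_sum, hess_sum, reg_lambda=1.0, reg_alpha=1.0)
```

Without regularization the leaf predicts the mean residual (3.0). The L2 term shrinks it to 2.25, and adding the L1 term shrinks it further to 2.0, so each tree makes a more cautious correction.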

In general, XGBoost is used as a scalable, parallel tree boosting ensemble method. It is one of the most popular methods for classification and regression.

Boosting in machine learning is another excellent ensemble method. It is a variant of bagging in which the outputs of the previous models affect the next model, which means that the models are trained sequentially. The primary distinction between boosting and bagging is that bagging is a parallel ensemble in which each model is built independently, while boosting is a sequential ensemble that adds new models to perform well where previous models are weak (however, parts of the boosting process can be run in parallel, as extreme gradient boosting does). Both also address the bias-variance trade-off: bagging aims to decrease variance (very complex base models are used, so bagging is suited to reducing the overfitting of complex models), while boosting aims to decrease bias (very simple base models are used, so boosting systematically increases the complexity of the model).