FEATURE CONSTRUCTION

Introduction

Feature construction is the process of creating new variables (features) from existing variables. It is an important step in machine learning because the new features can expose information and insight that the raw data does not show directly. In Binning, feature construction means converting numerical features into categorical ones. Decomposing variables is another way to construct features. The resulting features can then be used in machine learning projects, for example by creating Dummy Variables through Encoding. You can also derive new features from pre-existing ones or rebuild existing features from scratch. Each of these methods produces a different type of feature.

- Feature Engineering

- Feature Selection

What is Feature Engineering?

The term “feature engineering” refers to the process of selecting, transforming, and creating features (also called variables or predictors) from raw data. These features are then used by a machine-learning model to predict the variable of interest. In other words, it involves selecting the most relevant features from the raw data and transforming them into a form that helps the model perform better.

This process has several steps, such as:

Feature selection: This step involves choosing specific features from the raw data. Unrelated or redundant features may adversely affect the model’s performance, so it is important to identify and eliminate them.

Feature transformation: This step involves altering the chosen features so that they are more useful to, or more appropriate for, the machine learning model. Examples of feature transformation techniques include normalization, scaling, Binning, and one-hot encoding.

Feature creation: This step creates new features from existing ones. It can be accomplished by manipulating or combining existing features, or by incorporating other data sources.

The aim of feature engineering is to develop a set of features that captures the fundamental patterns present in the data and improves the model's performance. Successful feature engineering depends on domain knowledge and subject-matter expertise, together with an understanding of the machine-learning algorithms being used.
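The three steps described above can be sketched on a tiny table. This is only an illustration using pandas: the column names (`customer_id`, `height_cm`, `weight_kg`) and the values are made up, and min-max scaling and BMI are just one possible choice of transformation and created feature.

```python
import pandas as pd

# Hypothetical raw data; column names and values are illustrative only.
raw = pd.DataFrame({
    "customer_id": [101, 102, 103],
    "height_cm": [160.0, 175.0, 180.0],
    "weight_kg": [60.0, 70.0, 90.0],
})

# Feature selection: drop an identifier that carries no predictive signal.
features = raw.drop(columns=["customer_id"])

# Feature transformation: min-max scale weight to the range [0, 1].
w = features["weight_kg"]
features["weight_scaled"] = (w - w.min()) / (w.max() - w.min())

# Feature creation: combine two existing features into a new one (BMI).
height_m = features["height_cm"] / 100
features["bmi"] = features["weight_kg"] / height_m ** 2

print(features)
```

In a real project, which columns to drop, how to scale, and which combinations to create would follow from domain knowledge rather than from a fixed recipe.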

What is Feature Selection?

Feature selection is the process of choosing a relevant subset of features from the full set available in order to improve a machine-learning model. The goal of feature selection is to reduce the dimensionality of the data by eliminating noisy, redundant, or irrelevant features that could hurt the model's accuracy or its interpretability.

Feature selection matters because it helps reduce the risk of overfitting, improve the model's speed and accuracy, and reduce the model’s complexity. There are several families of feature selection methods, which include:

Filter methods: These techniques score features using statistical tests or correlation analysis. Examples of filter methods include the chi-square test, the correlation coefficient, and mutual information.

Wrapper methods: These techniques select features according to the performance of a particular machine-learning algorithm. Examples of wrapper strategies include recursive feature elimination and forward selection.

Embedded methods: These techniques perform feature selection as part of the model-training process. Examples of embedded methods include Lasso regression and decision tree-based techniques.
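As a minimal sketch of a filter method, the snippet below keeps the features whose absolute correlation with the target reaches a cutoff. The data, the column names, and the cutoff of 0.5 are assumptions chosen for illustration, not recommended values.

```python
import pandas as pd

# Hypothetical data set; "price" plays the role of the target variable.
df = pd.DataFrame({
    "rooms": [2, 3, 4, 5, 6],
    "area": [50, 70, 90, 110, 130],
    "noise": [3, 1, 4, 1, 5],
    "price": [100, 150, 200, 250, 300],
})

target = "price"
threshold = 0.5  # arbitrary cutoff, assumed for this example

# Absolute Pearson correlation of every candidate feature with the target.
correlations = df.drop(columns=[target]).corrwith(df[target]).abs()

# Keep only the features that reach the cutoff.
selected = correlations[correlations >= threshold].index.tolist()

print(correlations)
print(selected)
```

Because this filter scores each feature on its own, it keeps both `rooms` and `area` even though they carry the same information here. Detecting that kind of redundancy needs an extra step, such as checking correlations between the features themselves.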

The choice of feature selection method depends on the specific problem being addressed and the nature of the dataset. In general, feature selection is a critical part of the machine-learning process that helps improve both the efficiency and the interpretability of the model.

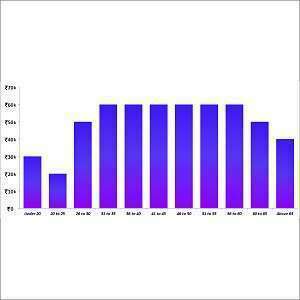

Binning, which is the opposite of Encoding, constructs new categorical features from numerical features. Sometimes a numerical feature is not directly usable by the learning algorithm. In these cases, it is first binned into categories, and the resulting categorical feature is then encoded as dummy variables. Binning can also make the constructed features better suited to linear algorithms, and it can help reduce noise and increase the reliability of models. There are two types of binning: supervised and unsupervised.
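A minimal sketch of unsupervised binning with pandas follows. The ages, bin edges, and labels are made up for illustration. `pd.cut` bins by fixed edges, while `pd.qcut` bins by quantiles so that each bin holds roughly the same number of rows.

```python
import pandas as pd

# Hypothetical numerical feature.
ages = pd.Series([5, 17, 25, 42, 67, 80], name="age")

# Fixed-edge binning: intervals are (0, 18], (18, 40], (40, 65], (65, 120].
bins = [0, 18, 40, 65, 120]
labels = ["child", "young_adult", "adult", "senior"]
age_group = pd.cut(ages, bins=bins, labels=labels)

# Equal-frequency binning: three bins with about the same number of rows.
age_tercile = pd.qcut(ages, q=3, labels=["low", "mid", "high"])

print(pd.DataFrame({"age": ages, "group": age_group, "tercile": age_tercile}))
```

Supervised binning would instead choose the bin edges using the target variable, which is not shown here.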

Encoding is the process of creating features by converting categorical features to numerical features. It is, in a sense, the opposite of Binning. There are two types of encoding: Binary and Target Based. We will look at various Binary Encoding methods, including One Hot Encoding, Scaler Encoder, and encoding through dummy variables. Label Encoder and Simple Replace are two other methods. All of these methods will be explored in this blog post.
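The snippet below sketches three of these ideas with pandas: one-hot (dummy variable) encoding, label encoding, and a simple target-based encoding that replaces each category with the mean of the target for that category. The `city` and `bought` columns are hypothetical, and the target encoding shown is the plainest possible variant.

```python
import pandas as pd

# Hypothetical data: a categorical feature and a binary target.
df = pd.DataFrame({
    "city": ["Paris", "Rome", "Paris", "Oslo"],
    "bought": [1, 0, 1, 0],
})

# One-hot encoding: one 0/1 dummy column per category.
one_hot = pd.get_dummies(df["city"], prefix="city", dtype=int)

# Label encoding: each category becomes an integer code
# (codes follow the alphabetical order of the categories).
df["city_label"] = df["city"].astype("category").cat.codes

# Target-based encoding: replace each category by the target mean in that category.
df["city_target"] = df.groupby("city")["bought"].transform("mean")

print(one_hot)
print(df)
```

In practice, target-based encodings should be computed on the training data only and then applied to validation or test data; otherwise information about the target leaks into the features.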

There are many ways to create new features from pre-existing features, and this blog post will make you aware of some of these options. Feature crossing is one such method: it creates a new categorical feature by combining two existing categorical features. You can also create a new feature by changing the measurement unit of an existing feature. Creating different KPIs is another type of feature construction.
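These three options can be sketched in a few lines of pandas. All column names and values below are hypothetical, and revenue per visit is just one example of a KPI.

```python
import pandas as pd

# Hypothetical data set.
df = pd.DataFrame({
    "country": ["US", "US", "DE"],
    "device": ["mobile", "desktop", "mobile"],
    "distance_miles": [1.0, 10.0, 100.0],
    "revenue": [20.0, 50.0, 30.0],
    "visits": [4, 10, 5],
})

# Feature cross: combine two categorical features into a new categorical one.
df["country_x_device"] = df["country"] + "_" + df["device"]

# Unit change: express the same quantity in kilometres (1 mile = 1.609344 km).
df["distance_km"] = df["distance_miles"] * 1.609344

# KPI: a ratio of two existing features.
df["revenue_per_visit"] = df["revenue"] / df["visits"]

print(df)
```

The crossed feature is still categorical, so it would normally be encoded before being passed to a model.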
