UNSUPERVISED LEARNING MODEL

In this chapter, we will learn about unsupervised learning in detail, with examples, and how it differs from supervised learning. We will also introduce segmentation, describe its two types, and compare them.

In unsupervised learning, no target labels or outcomes are predefined. Instead, the algorithm finds patterns in the data on its own, and the results are left for the user to interpret and draw conclusions from.

- It is a type of machine learning technique in which the model is not supervised; it learns from the data on its own.

- It works with unlabelled datasets.

- These algorithms allow the user to perform more complex processing tasks than supervised learning.

- Unsupervised learning is more unpredictable than other methods, because there are no labels to check its results against.

Suppose we give an unsupervised learning algorithm an input dataset that contains different images of fruits, e.g., apples and mangoes. The algorithm is not trained on labelled examples, so it has no prior information about the dataset's features. Instead, it learns from the dataset on its own and then performs the task by segmenting the images into groups based on similarity.

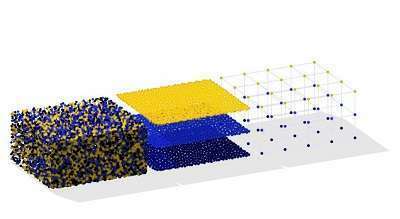

Common techniques in unsupervised learning are clustering, dimensionality reduction, and anomaly detection.

Clustering means grouping data into subgroups. It works on unlabelled data and identifies unknown structures in it. A typical clustering task is segmenting customers into distinct groups. In clustering, we explore our data by finding patterns, and these patterns reveal how many distinct groups or data types the dataset contains; that is, we can find the "groups" or "subpopulations". By examining each subgroup or cluster further, we can find its size and properties. Commonly used clustering algorithms include k-means, hierarchical (agglomerative) clustering, and DBSCAN.
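The k-means idea can be sketched in plain Python as below. This is a minimal illustration, not a production implementation: it uses only the standard library, seeds the centroids deterministically with a farthest-first rule (an assumption made so the example is reproducible; libraries usually use random or k-means++ seeding), and the customer data (annual spend, store visits per month) is made up.

```python
import math


def farthest_first_init(points, k):
    """Choose k starting centroids: the first point, then repeatedly the point
    farthest from every centroid chosen so far (deterministic seeding)."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        )
    return centroids


def kmeans(points, k, max_iter=100):
    """Lloyd's k-means: alternate assignment and update steps until the
    cluster assignments stop changing. Returns (labels, centroids)."""
    centroids = farthest_first_init(points, k)
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        new_labels = [
            min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points
        ]
        if new_labels == labels:
            break  # converged: no point changed cluster
        labels = new_labels
        # Update step: move each centroid to the mean of its member points.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the previous centroid if a cluster is empty
                centroids[j] = tuple(sum(col) / len(members) for col in zip(*members))
    return labels, centroids


# Made-up customer data: (annual spend, store visits per month).
customers = [(200, 2), (220, 3), (250, 2), (1200, 10), (1150, 12), (1300, 11)]
labels, centroids = kmeans(customers, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

Each customer ends up labelled with the index of its nearest centroid, so the three low-spend customers and the three high-spend customers fall into separate segments. For real data, libraries such as scikit-learn provide tested k-means, agglomerative clustering, and DBSCAN implementations.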

Dimensionality reduction uses the structural characteristics of the data to simplify it. In this technique, we reduce the number of dimensions (features) of the data without losing too much information from the original dataset. Commonly used algorithms for dimensionality reduction include principal component analysis (PCA) and non-negative matrix factorization (NMF).

Dimensionality reduction is especially important when working with large amounts of high-dimensional data.
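As a rough sketch of how PCA reduces dimensions, the code below finds the first principal component of some made-up 2-D points by power iteration on their covariance matrix, then projects each point onto it, turning two features into one. It is a simplified, standard-library-only illustration that assumes one component is enough; real PCA implementations compute all components via eigendecomposition or SVD.

```python
import math


def first_principal_component(data, iters=200):
    """Return (mean, unit direction, explained-variance ratio) of the first
    principal component, found by power iteration on the covariance matrix."""
    n, d = len(data), len(data[0])
    mean = [sum(row[i] for row in data) / n for i in range(d)]
    centered = [[row[i] - mean[i] for i in range(d)] for row in data]
    # Sample covariance matrix (d x d).
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    # Power iteration: repeatedly multiplying by cov converges to the
    # eigenvector with the largest eigenvalue (assumes the start vector is
    # not orthogonal to it).
    v = [1.0] * d
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Variance along v divided by the total variance (the trace of cov).
    var_along_v = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    ratio = var_along_v / sum(cov[i][i] for i in range(d))
    return mean, v, ratio


def project(data, mean, direction):
    """Reduce each point to a single coordinate along `direction`."""
    return [sum((x - m) * u for x, m, u in zip(row, mean, direction)) for row in data]


# Made-up points lying close to the line y = 2x.
points = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2), (5.0, 9.8)]
mean, direction, ratio = first_principal_component(points)
reduced = project(points, mean, direction)
print(direction, ratio)  # direction close to (0.45, 0.89); ratio close to 1
```

Almost all of the variance lies along one direction, so the single coordinate per point in `reduced` keeps most of the information carried by the original two features.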

Anomaly detection finds anomalies, i.e., outliers in the data. It can be carried out with clustering methods such as k-means and Gaussian mixture models: points that do not fit any of the main clusters are treated as anomalies.

Example: Fraudulent transactions

Suspicious fraud patterns include small clusters of credit card transactions with a high volume of attempts for small amounts at new merchants. Such transactions form a new, small cluster that stands apart from normal activity; this cluster is flagged as an anomaly, indicating that fraudulent transactions may be happening.
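The fraud example can be approximated with a small sketch: cluster the transactions, then flag every point that belongs to an unusually small cluster. The features (amount, attempts in the last hour), the data, `k=2`, and the `min_fraction` threshold are all hypothetical, and the k-means routine is a compact standard-library version with deterministic farthest-first seeding. A real system would also scale the features and tune these settings.

```python
import math


def kmeans(points, k, max_iter=100):
    """Compact Lloyd's k-means with farthest-first seeding; returns labels."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    labels = None
    for _ in range(max_iter):
        # Assign each point to its nearest centroid.
        new_labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        if new_labels == labels:
            break
        labels = new_labels
        # Move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = tuple(sum(col) / len(members) for col in zip(*members))
    return labels


def flag_small_clusters(points, k, min_fraction=0.3):
    """Return indices of points whose cluster holds less than `min_fraction`
    of all points (hypothetical rule: small clusters are suspicious)."""
    labels = kmeans(points, k)
    sizes = {j: labels.count(j) for j in set(labels)}
    return [i for i, lab in enumerate(labels) if sizes[lab] < min_fraction * len(points)]


# Hypothetical transactions: (amount, attempts in the last hour).
transactions = [
    (45.0, 1), (60.0, 2), (52.0, 1), (70.0, 2),
    (38.0, 1), (55.0, 1), (65.0, 2), (48.0, 1),
    (2.0, 15), (3.0, 14), (1.0, 16),  # many attempts, tiny amounts
]
print(flag_small_clusters(transactions, k=2))  # [8, 9, 10]
```

The three tiny, repeated transactions form their own cluster of 3 out of 11 points, which falls below the size threshold, so they are reported as anomalies.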

Difference between Supervised and Unsupervised Learning Setup

The most significant difference between the two learning setups is that supervised learning deals with labeled data, while unsupervised learning deals with unlabelled data.

In supervised learning, we use machine learning algorithms such as k-NN, Naïve Bayes, logistic regression, decision trees, and SVMs for classification and regression. In unsupervised learning, we have methods such as clustering instead. However, in contrast with supervised learning, unsupervised learning has hardly any evaluation methods that can confirm whether a model's result is precise or correct, because there are no labels to compare the output against.

Supervising a model means observing and directing how it carries out a task. We do this by training it on a labelled dataset, in which each example already has the correct answer.

Example:

To predict employees' salaries, we use data about their experience and predict salary from that data. Experience is the independent variable, and salary is the dependent variable.
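For the salary example, a simple linear regression can be fitted with ordinary least squares in a few lines. The experience and salary figures below are invented so that the arithmetic is easy to follow; they are not real data.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the n's cancel out.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


experience = [1, 2, 3, 4, 5]    # independent variable (years)
salary = [30, 35, 40, 45, 50]   # dependent variable (thousands)
intercept, slope = fit_simple_linear_regression(experience, salary)
predicted = intercept + slope * 6  # salary for 6 years of experience
print(predicted)  # 55.0
```

The fitted line is salary = 25 + 5 × experience, so the model predicts a continuous value (55, in thousands) for six years of experience.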

Classification techniques are used to predict discrete or categorical values, whereas regression predicts a continuous value rather than a discrete class.
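To make the contrast concrete, here is a minimal k-nearest-neighbours (k-NN) classifier that predicts a discrete label rather than a number. The features (years of experience, number of certifications), the "junior"/"senior" labels, and `k=3` are hypothetical choices for illustration.

```python
import math
from collections import Counter


def knn_classify(train, query, k=3):
    """Predict a discrete label by majority vote among the k nearest
    training points (Euclidean distance)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical labelled data: (years of experience, certifications) -> level.
train = [
    ((1, 0), "junior"), ((2, 1), "junior"), ((3, 0), "junior"),
    ((8, 2), "senior"), ((10, 3), "senior"), ((12, 4), "senior"),
]
print(knn_classify(train, (2, 0)))  # junior
print(knn_classify(train, (9, 3)))  # senior
```

The output is always one of the known categories, which is what distinguishes classification from regression.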

In unsupervised learning, the model works independently and tries to discover patterns by forming clusters or subgroups that are common across the entire dataset. That means the unsupervised learning algorithm is trained directly on the unlabelled dataset and draws its conclusions from it. Its algorithms are more difficult than those of supervised learning, since we have no prior information about the data or the outcome.

Key factors distinguishing supervised and unsupervised learning

- Aim: In supervised learning, the aim is to predict the outcome for new data from a given labelled dataset, while in unsupervised learning, the aim is to gain insights from a large volume of data.

- Applications: Applications such as price prediction, weather forecasting, and sentiment analysis work very well with supervised learning algorithms. Unsupervised learning, on the other hand, proves ideal for tasks such as image detection, anomaly detection, finding customer segments, and feature selection.

- Complexity: Supervised learning is comparatively easy to implement, because the labels tell the model what to learn. This is unlike unsupervised learning, where we need more complex algorithms to gain insights from large datasets.

- Weakness: Supervised learning needs more time to train on the dataset. Unsupervised learning methods, meanwhile, can give accurate results only if a human validates the output.

Difference between heuristic and scientific segmentation

In segmentation, we divide the data into groups based on similarity. There are two types of segmentation:

- Heuristic segmentation

- Scientific segmentation

Key differences

- Approach: In heuristic segmentation, we find groups based on rules, while in scientific segmentation, we find groups based on similarity with the help of algorithms.

- Complexity: Heuristic segmentation becomes complex when we work on large datasets with more than 4 variables, while scientific segmentation is easy to implement with many variables.

- Applications: Heuristic segmentation works best for applications where we need to set rules. For example, a bank's loan customers can be segmented based on security (collateral) value and overdue loan amount; here, heuristic segmentation works much better than scientific segmentation. For scientific segmentation, we can take the example of the iris dataset, where we group flowers according to the similarity of their properties.
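Heuristic segmentation can be written down directly as rules in code. The sketch below segments a bank's loan customers using the two variables from the example, security value and overdue loan amount; the segment names and thresholds (for instance, collateral worth at least twice the overdue amount) are illustrative assumptions, not real lending policy. Scientific segmentation would instead let a clustering algorithm such as k-means discover the groups from the data.

```python
def segment_loan_customer(security_value, overdue_amount):
    """Assign a loan customer to a segment using fixed, hand-written rules.

    The thresholds are hypothetical, chosen only for illustration.
    """
    if overdue_amount == 0:
        return "low risk"
    if security_value >= 2 * overdue_amount:
        return "medium risk"  # overdue, but well covered by collateral
    return "high risk"        # overdue and poorly secured


# Hypothetical customers: name -> (security value, overdue loan amount).
customers = {
    "A": (50_000, 0),
    "B": (40_000, 10_000),
    "C": (5_000, 12_000),
}
segments = {name: segment_loan_customer(*values) for name, values in customers.items()}
print(segments)  # {'A': 'low risk', 'B': 'medium risk', 'C': 'high risk'}
```

Because every rule is explicit, the segments are easy to explain, but adding more variables quickly multiplies the number of rules to maintain.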