What is Bagging?

Bagging is a well-known method for generating a variety of predictors and then combining them in a straightforward way. The term “bagging” comes from Bootstrap Aggregating. We use the same learning algorithm for every predictor, but each one is trained on a different random sample of the training data (typically of the same size as the original training set). Bagging is powerful because it works well with complex, high-variance models such as fully grown decision trees. (This distinguishes it from Boosting, which typically works with weak learners such as shallow trees.)
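Written out in symbols, the combination step looks roughly like this. The notation is introduced here only for illustration: B is the number of bags, f_b is the model trained on bag b, and k ranges over the class labels. Regression averages the models, and classification takes a majority vote:

```latex
\hat{f}_{\text{bag}}(x) = \frac{1}{B}\sum_{b=1}^{B}\hat{f}_b(x) \qquad \text{(regression)}

\hat{y}_{\text{bag}}(x) = \arg\max_{k}\sum_{b=1}^{B}\mathbf{1}\left[\hat{f}_b(x) = k\right] \qquad \text{(classification)}
```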

Types of Bagging Methods

There are several variants of Bagging, which differ in how the subsets (bags) are drawn. In practice, they are obtained by tweaking the parameters of the bagging meta-estimator.

Pasting

Pasting produces bags (random subsets) in which the samples are distinct: no observation is repeated within a bag. In other words, the random subsets are drawn without replacement. The main drawback of this technique is that, because an observation cannot be repeated, each bag has to be smaller than the training set to differ from it. This can be a problem if the dataset isn’t large enough, so pasting is best suited to very large datasets.

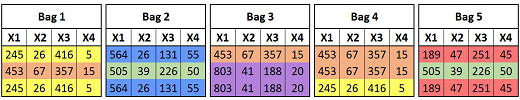

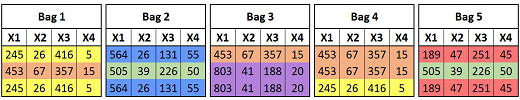

To grasp the concept, imagine a dataset of 10 records. First, we split the data into a training set of 6 records (N) and a test set of 4 records.

Next, we create m bags (subsets), each of size N’. A common rule of thumb is to keep N’ below 60 percent of N, so in this case each bag holds 3 records. The 3 records left out each time a bag is created are called out-of-bag (OOB) records. They can be used to test the model trained on that bag during the training phase (more about this in Random Forests).
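The 10-record walk-through above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the records are just the integers 0 to 9, the number of bags (m = 4) and the random seed are arbitrary choices, and `make_pasting_bags` is a hypothetical helper rather than a library function.

```python
import random

# Hypothetical toy data: ten records identified by their index.
records = list(range(10))

rng = random.Random(0)                      # fixed seed so the sketch is reproducible
shuffled = records[:]
rng.shuffle(shuffled)
train, test = shuffled[:6], shuffled[6:]    # N = 6 training records, 4 test records

def make_pasting_bags(train, m, bag_size, rng):
    """Draw m bags WITHOUT replacement and return (bag, out_of_bag) pairs."""
    bags = []
    for _ in range(m):
        bag = rng.sample(train, bag_size)          # no record repeats inside a bag
        oob = [r for r in train if r not in bag]   # records left out of this bag
        bags.append((bag, oob))
    return bags

n_prime = int(0.6 * len(train))   # rule of thumb: N' below 60% of N (60% of 6 is 3.6, so 3)
bags = make_pasting_bags(train, m=4, bag_size=n_prime, rng=rng)
for bag, oob in bags:
    print("bag:", sorted(bag), "out-of-bag:", sorted(oob))
```

Each bag and its out-of-bag records together always make up the full training set. That is why the left-out records can serve as a small test set for the model built on that bag.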

We can now train a model on each of these datasets (for instance, using KNN as the learning algorithm) and then combine the models’ predictions by majority vote to determine the Y variable.
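The KNN-plus-majority-vote step can be sketched with the standard library only. This is a minimal sketch: the six labelled 2-D points are hypothetical, k = 1 and m = 15 bags are arbitrary choices, and the bag size reuses the 60% rule of thumb from the text. Each "model" is simply KNN restricted to one pasting bag.

```python
import math
import random
from collections import Counter

def knn_predict(train, x, k=1):
    """k-nearest-neighbours majority vote over (point, label) pairs."""
    nearest = sorted(train, key=lambda pair: math.dist(pair[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def bagged_knn_predict(train, x, m=15, bag_size=None, k=1, seed=0):
    """Run KNN on each of m pasting bags and combine the answers by majority vote."""
    rng = random.Random(seed)
    if bag_size is None:
        bag_size = max(1, int(0.6 * len(train)))   # rule-of-thumb bag size
    votes = []
    for _ in range(m):
        bag = rng.sample(train, bag_size)          # pasting: no repeats within a bag
        votes.append(knn_predict(bag, x, k))
    return Counter(votes).most_common(1)[0][0]

# Hypothetical toy data: two well-separated clusters.
data = [((0.0, 0.0), "A"), ((0.2, 0.1), "A"), ((0.1, 0.3), "A"),
        ((1.0, 1.0), "B"), ((0.9, 1.2), "B"), ((1.1, 0.8), "B")]
print(bagged_knn_predict(data, (0.05, 0.1)))
print(bagged_knn_predict(data, (1.0, 0.9)))
```

A single bag can occasionally miss every point of the right class and vote wrongly. The majority vote over many bags smooths those individual mistakes out.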

This means that we predict Y using subsets whose records are distinct within each bag.

With only a few bags, the output can look extremely complex; however, it becomes simpler as the number of bags increases.

Bootstrapping

The most well-known method is Bootstrap Aggregating. Here the sample is drawn with replacement, creating a bootstrap sample: each bag is a random subset that may contain duplicate records (observations/samples). Bootstrapping is also a standard statistical technique for estimating standard errors and confidence intervals, that is, for understanding how much an estimate varies from one sample of the data to another.
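Below is a minimal sketch of both uses of the bootstrap mentioned above: drawing a bag with replacement, and building a confidence interval for a mean. The data values, the 2000 resamples and the percentile method are assumptions made for illustration; the percentile interval is only one of several bootstrap interval methods.

```python
import random
import statistics

def bootstrap_sample(data, rng):
    """Draw len(data) observations WITH replacement, so duplicates can occur."""
    return rng.choices(data, k=len(data))

def bootstrap_ci_mean(data, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean of `data`."""
    rng = random.Random(seed)
    means = sorted(statistics.fmean(bootstrap_sample(data, rng)) for _ in range(n_boot))
    lower = means[round(alpha / 2 * (n_boot - 1))]
    upper = means[round((1 - alpha / 2) * (n_boot - 1))]
    return lower, upper

# Hypothetical measurements.
data = [2.1, 3.4, 1.9, 5.6, 4.2, 3.3, 2.8, 4.9, 3.0, 3.7]
print(bootstrap_sample(data, random.Random(1)))   # one bag, possibly with repeats
print(bootstrap_ci_mean(data))                    # approximate 95% interval for the mean
```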

Random Subspaces

In this variant of bagging, the subsets are created by randomly choosing features rather than observations, as was done previously. Each bag therefore contains all of the samples but only a random selection of the features.
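A minimal sketch of random subspaces, assuming the data is a plain list of rows. The 3-by-4 matrix, m = 3 bags and 2 features per bag are hypothetical choices. Every bag keeps all the rows but only a random set of columns.

```python
import random

def random_subspace_bags(X, m, n_features, seed=0):
    """Build m bags that keep every row but only n_features random columns."""
    rng = random.Random(seed)
    p = len(X[0])
    bags = []
    for _ in range(m):
        cols = sorted(rng.sample(range(p), n_features))   # features drawn without replacement
        bags.append((cols, [[row[j] for j in cols] for row in X]))
    return bags

# Hypothetical data: 3 observations, 4 features each.
X = [[5.1, 3.5, 1.4, 0.2],
     [4.9, 3.0, 1.4, 0.2],
     [6.2, 3.4, 5.4, 2.3]]
bags = random_subspace_bags(X, m=3, n_features=2)
for cols, subset in bags:
    print("features", cols, "->", subset)
```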

Random Patches

In this bagging method, the subsets are constructed by randomly selecting both the features and the observations (samples). When using statistical software, we have to specify the number (or fraction) of samples and features used to make each bag, as well as whether the subsets are drawn with or without replacement. These inputs are hyperparameters and are usually tuned via grid search. A typical choice is 40-60% of the samples and features per bag.
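Drawing one random patch can be sketched as follows. The fractions, the seed and the 10-by-6 matrix are hypothetical. In scikit-learn, the comparable knobs on `BaggingClassifier` are `max_samples`, `max_features`, `bootstrap` and `bootstrap_features`, but the function below is a standalone illustration, not that library's code.

```python
import random

def random_patch(X, sample_frac=0.5, feature_frac=0.5, bootstrap=False, seed=0):
    """Return (row_indices, col_indices, patch) for one random-patches bag."""
    rng = random.Random(seed)
    n, p = len(X), len(X[0])
    n_rows = max(1, round(sample_frac * n))
    n_cols = max(1, round(feature_frac * p))
    if bootstrap:
        rows = [rng.randrange(n) for _ in range(n_rows)]   # samples WITH replacement
    else:
        rows = rng.sample(range(n), n_rows)                 # samples WITHOUT replacement
    cols = sorted(rng.sample(range(p), n_cols))             # features WITHOUT replacement
    patch = [[X[i][j] for j in cols] for i in rows]
    return rows, cols, patch

# Hypothetical 10 x 6 data matrix.
X = [[10 * i + j for j in range(6)] for i in range(10)]
rows, cols, patch = random_patch(X, sample_frac=0.5, feature_frac=0.5)
print(rows, cols)
```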

On this page, we’ll focus on bagging using bootstrap sampling (i.e., subsets drawn with replacement).

Reducing Complexity with Bagging

The primary goal of ensemble methods such as bagging is to reduce overfitting, i.e., to lower the variance of the model without substantially increasing its bias. As discussed in Regularized Regression, a model overfits when it memorizes the training dataset, and we limit overfitting by reducing the model’s complexity. Bagging deals with this issue to a certain degree. In a classification problem, for instance, it is harder for any single classifier to memorize the data, because each classifier is trained on its own training dataset derived from the original one. Each of these individual training datasets deliberately leaves out some data points (observations/samples/rows), so it differs from the original training dataset, and a model cannot memorize points it never sees. Thus, even if the models trained on these distinct datasets are heavily overfitted, each one misses different points. When the models are combined, the resulting ensemble is less complex (less overfitted) than its individual members. Bagging combines the models by averaging their predictions (for regression) or by majority vote (for classification).
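A standard result makes this variance argument concrete. It rests on an idealizing assumption: the B bagged predictions at a point x are identically distributed, each with variance σ² and pairwise correlation ρ. The variance of their average is then:

```latex
\operatorname{Var}\left(\frac{1}{B}\sum_{b=1}^{B}\hat{f}_b(x)\right)
  = \frac{1}{B^2}\left[B\sigma^2 + B(B-1)\rho\sigma^2\right]
  = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
```

As B grows, the second term shrinks towards zero, so adding bags reduces the variance of even heavily overfitted models. The first term, ρσ², does not shrink, which is why decorrelating the individual models (as Random Forests do) also matters.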

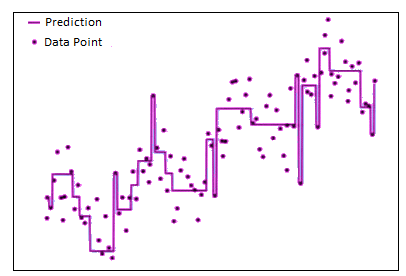

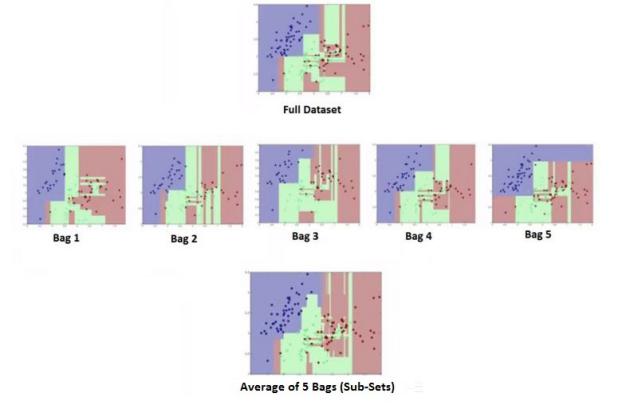

Suppose we use fully grown decision trees as the learning algorithm. These models will be very complex and overfitted. Let’s say we start by creating five bags and then combine the five trees to arrive at the final result.

With five bags, the combined model is already less complex than the individual decision trees grown to pure leaves, and we can increase the number of bags to get better results.

We can see that the complexity of the ensemble decreases as the number of bags increases, even though each individual model is a complex, overfitted decision tree. This also tends to improve the model’s accuracy; in classification, for instance, it reduces the misclassification rate.

Limitations of Bagging

Bagging offers little benefit when the predictions are linear functions of the input data, because the average of linear functions is itself a linear function. It is useful when the relationship is nonlinear or when the predictor is a thresholded function of the inputs, as with decision trees.
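The linearity argument can be checked numerically. This is a minimal sketch under stated assumptions: ordinary least-squares lines are fitted on bootstrap samples of hypothetical, roughly linear data, and 50 bags is an arbitrary count. Averaging the bagged lines gives back a single line whose intercept and slope are the averages of the individual ones, so nothing new is gained in flexibility.

```python
import random

def fit_line(points):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    b = sxy / sxx
    return mean_y - b * mean_x, b

def bag_lines(points, n_bags=50, seed=0):
    """Fit one line per bootstrap sample and return the list of (a, b) fits."""
    rng = random.Random(seed)
    fits = []
    while len(fits) < n_bags:
        sample = rng.choices(points, k=len(points))
        if len({x for x, _ in sample}) < 2:
            continue    # all x values equal: the slope is undefined, so redraw
        fits.append(fit_line(sample))
    return fits

def bagged_predict(fits, x):
    """Bagging for regression: average the individual predictions."""
    return sum(a + b * x for a, b in fits) / len(fits)

# Hypothetical, roughly linear data (about y = 1 + 2x).
points = [(0, 1.2), (1, 2.9), (2, 5.1), (3, 7.2), (4, 8.8), (5, 11.1), (6, 13.0), (7, 14.9)]
fits = bag_lines(points)
a_bar = sum(a for a, _ in fits) / len(fits)
b_bar = sum(b for _, b in fits) / len(fits)
# The bagged model is just the line y = a_bar + b_bar * x.
for x in (0.0, 2.5, 10.0):
    print(x, bagged_predict(fits, x), a_bar + b_bar * x)
```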

Another drawback is a lack of interpretability: unlike a simple decision tree, which can be read on its own, the ensemble works like a black box that does not reveal how it reaches its results.

Bagging is an effective approach to tackling overfitting, and its subsampling improves model quality. It is possible to build models with various learning algorithms and use bootstrap aggregation to produce the subsets (bags) they are trained on. The same idea can be applied to problems such as clustering and outlier detection. Bagging can also be used to select variables with decision trees. For each tree, the 10 most important variables are recorded, and behind the scenes the algorithm counts how many times each variable appears in a top 10. Aggregating these counts identifies the key variables.
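The top-10 counting idea can be sketched as below. The importance scores are hypothetical stand-ins for whatever per-tree importance measure is used, and k = 2 replaces k = 10 only to keep the example short. Computing the importances themselves is outside the scope of this sketch.

```python
from collections import Counter

def top_k_frequency(importances_per_tree, k=10):
    """Count how often each variable appears in a tree's top-k by importance."""
    counts = Counter()
    for importances in importances_per_tree:
        ranked = sorted(importances, key=importances.get, reverse=True)
        counts.update(ranked[:k])
    return counts

# Hypothetical importance scores from three bagged trees (k=2 to keep it short).
trees = [{"age": 0.5, "income": 0.3, "zip": 0.2},
         {"age": 0.4, "income": 0.1, "zip": 0.5},
         {"age": 0.6, "income": 0.3, "zip": 0.1}]
print(top_k_frequency(trees, k=2).most_common())
```

Here "age" makes the top 2 in all three trees, "income" in two and "zip" in one, so "age" would be flagged as the most important variable.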

There are alternatives to Bagging, such as Bragging (Bootstrap Robust Aggregating), which combines the bootstrap models with a robust statistic, typically the median, instead of the mean. It has been used with methods such as MARS (Multivariate Adaptive Regression Splines), where it can produce better results than both the original MARS and bagged MARS, so it should also be considered.
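The difference between the two aggregation rules shows up clearly when one bootstrap model goes astray. In the minimal sketch below, the five predictions for a single input are hypothetical and the median is used as the robust statistic.

```python
import statistics

def bagging_aggregate(predictions):
    """Standard bagging for regression: the mean of the bootstrap models' predictions."""
    return statistics.fmean(predictions)

def bragging_aggregate(predictions):
    """Bragging: a robust aggregate of the same predictions, here the median."""
    return statistics.median(predictions)

# Hypothetical predictions for one input from five bootstrap models; one is far off.
preds = [10.1, 9.8, 10.3, 10.0, 25.0]
print(bagging_aggregate(preds))   # pulled upwards by the outlying model
print(bragging_aggregate(preds))  # 10.1
```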

Random Forests

Random Forest is a variation of the bagging algorithm designed specifically for decision trees. It combines bootstrapping of the samples with random selection of features to create diverse trees. There are two types of random forest: Random Forest Regressor, used for regression problems, and Random Forest Classifier, used for classification problems.

It is a very common bagging method that is often thought of as a separate ensemble method, but it is essentially bagging applied to decision trees. Another ensemble method based on decision trees is Extra-Trees (Extremely Randomized Trees), which will not be covered on this site.

Random Forest improves on plain bagging with decision trees. The main problem with bagging decision trees is that, on a large dataset, most of the trees learned from the different bags (subsets) are not very distinct. They are highly correlated and often share the same root node. To diversify the models, we need to introduce some variety within the learner itself. This is achieved by considering only a random subset of the features at each split during training, so that different trees (and different splits) use different features.
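A toy illustration of why feature subsampling diversifies the trees, under heavily simplified, hypothetical assumptions. The features are binary, and only the root split of a one-level stump is chosen, by counting misclassifications rather than using an impurity measure. The data are made up so that feature 0 is always the best split. With plain bagging every tree picks feature 0 at the root; with `max_features=1` the root feature varies from tree to tree. A real random forest repeats this random feature selection at every split of every tree.

```python
import random

def stump_error(rows, labels, j):
    """Errors of the better of the rules 'y = x_j' and 'y = 1 - x_j' on binary feature j."""
    mismatches = sum(r[j] != y for r, y in zip(rows, labels))
    return min(mismatches, len(rows) - mismatches)

def root_feature(rows, labels, candidates):
    """Pick the candidate feature with the fewest errors; ties go to the lower index."""
    return min(candidates, key=lambda j: (stump_error(rows, labels, j), j))

def root_features(rows, labels, n_trees=20, max_features=None, seed=0):
    """Root-split feature of each bootstrapped stump, with optional feature subsampling."""
    rng = random.Random(seed)
    n, p = len(rows), len(rows[0])
    roots = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]             # bootstrap sample of rows
        r = [rows[i] for i in idx]
        y = [labels[i] for i in idx]
        if max_features is None:
            candidates = range(p)                              # plain bagging: all features
        else:
            candidates = rng.sample(range(p), max_features)    # random subset of features
        roots.append(root_feature(r, y, candidates))
    return roots

# Hypothetical data: feature 0 equals the label; features 1 and 2 are weaker.
rows = [[0, 0, 0], [0, 0, 1], [0, 0, 0], [0, 1, 1],
        [1, 1, 1], [1, 1, 0], [1, 1, 1], [1, 0, 0]]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
print(set(root_features(rows, labels)))                   # always {0}
print(set(root_features(rows, labels, max_features=1)))   # a mix of features
```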

In Random Forests, subsets are made by randomly selecting samples with replacement and features without replacement. A subset may therefore contain repeated samples (observations), but its randomly chosen features are all distinct. Separate, highly complex decision trees are built on these subsets and then aggregated into a simpler, less overfitted ensemble that is typically more accurate than any single tree.

We can build as many trees as we wish within the ensemble. Beyond a certain point, however, the returns diminish while the computation time keeps growing. The most important parameter is the number of features considered at each split; common choices are the square root of the total number of features or 40% to 60% of the features. If the dataset is huge, growing every tree to full depth is probably not a good idea, because it takes a long time and uses a lot of computational resources. Instead, we can adjust parameters (such as the maximum depth) to restrict the growth of the trees.
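The rules of thumb for the number of features per split can be written as a small helper. This is a hypothetical function, not a library API. For reference, scikit-learn’s random forests expose the same choice through the `max_features` parameter (which accepts `"sqrt"` or a float fraction), and tree growth can be limited with `max_depth`.

```python
import math

def n_split_features(n_features, rule="sqrt", fraction=0.5):
    """Number of features to consider at each split under common rules of thumb."""
    if rule == "sqrt":
        k = math.isqrt(n_features)            # floor of the square root
    elif rule == "fraction":
        k = round(fraction * n_features)      # e.g. 0.4 to 0.6 of the features
    else:
        raise ValueError(f"unknown rule: {rule!r}")
    return max(1, k)                          # always try at least one feature

print(n_split_features(100))                   # 10
print(n_split_features(100, "fraction", 0.4))  # 40
```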

Random Forest is efficient on very large datasets and is often used when the data contain outliers or missing values, or when the classes are imbalanced. It also tends to handle outliers better than Adaptive Boosting (discussed later in this blog).

Out of Bag Error

One of the main benefits of Random Forest is that it removes the need for the conventional way of validating a model. In that approach, a portion of the data is set aside for testing, the model is built on the training data, and it is then applied to the test dataset to measure its accuracy. Instead, we can use the Out of Bag Error, or OOB Error (also called Out of Bag Estimation). It measures the prediction error of random forests, boosted decision trees, and other ensemble models that use bootstrap aggregation to create the subsets of data they are trained on.

The OOB error evaluates each observation using only the models whose bootstrap samples did not include it. Sub-sampling thus yields an out-of-bag estimate of the prediction error from observations that were not used to build the corresponding models. Leo Breiman (creator of the bagging technique) showed that the out-of-bag estimate is as accurate as using a test set of the same size as the training set. This removes the need for independent validation data and allows us to use the whole dataset to build the random forest. In Python’s scikit-learn, for example, setting oob_score=True makes the random forest compute the out-of-bag score while it is being built.
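Here is a minimal sketch of the OOB idea. To stay self-contained, it uses a bagged 1-nearest-neighbour classifier on hypothetical 2-D points instead of a random forest; the helper names and the choice of 25 bags are made up for illustration. Each row is scored only by the bags that did not contain it. With scikit-learn itself, you would pass `oob_score=True` to `RandomForestClassifier` and read the `oob_score_` attribute, which reports accuracy, so the OOB error is `1 - oob_score_`.

```python
import math
import random
from collections import Counter

def nn_predict(train, x):
    """1-nearest-neighbour prediction from (point, label) pairs."""
    return min(train, key=lambda pair: math.dist(pair[0], x))[1]

def oob_error(data, n_bags=25, seed=0):
    """Out-of-bag error of a bagged 1-NN classifier."""
    rng = random.Random(seed)
    n = len(data)
    votes = [Counter() for _ in range(n)]
    for _ in range(n_bags):
        idx = [rng.randrange(n) for _ in range(n)]     # bootstrap sample of row indices
        in_bag = set(idx)
        bag = [data[i] for i in idx]
        for i in range(n):
            if i not in in_bag:                        # only models that never saw row i vote
                votes[i][nn_predict(bag, data[i][0])] += 1
    scored = [(i, v.most_common(1)[0][0]) for i, v in enumerate(votes) if v]
    if not scored:
        raise ValueError("every row was in every bag; use more bags")
    wrong = sum(pred != data[i][1] for i, pred in scored)
    return wrong / len(scored)

# Hypothetical toy data: two well-separated clusters.
data = [((0.0, 0.0), "A"), ((0.2, 0.1), "A"), ((0.1, 0.3), "A"), ((0.3, 0.2), "A"),
        ((1.0, 1.0), "B"), ((0.9, 1.2), "B"), ((1.1, 0.8), "B"), ((1.2, 1.1), "B")]
print(oob_error(data))
```

No separate test set is held out: every row serves as validation data for exactly those bags that skipped it.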

Bagging, which mostly refers to bootstrap aggregation with decision trees, is an effective and widely used ensemble technique. Random Forest is an excellent application of the Bagging method and is suitable for analyzing large, complex datasets. However, it is less suited to some regression problems (for instance, its predictions cannot extrapolate beyond the range of the training targets). As mentioned previously, it also functions as a black box whose behavior can only be controlled through its parameters. Unlike boosting methods, it does not focus on the observations with high errors. Nevertheless, it is one of the most frequently used ensemble methods.